Free AI tools for designers

May 9, 2025

AI is revolutionizing the design industry empowering designers with an array of powerful tools to streamline their workflows and enhance…

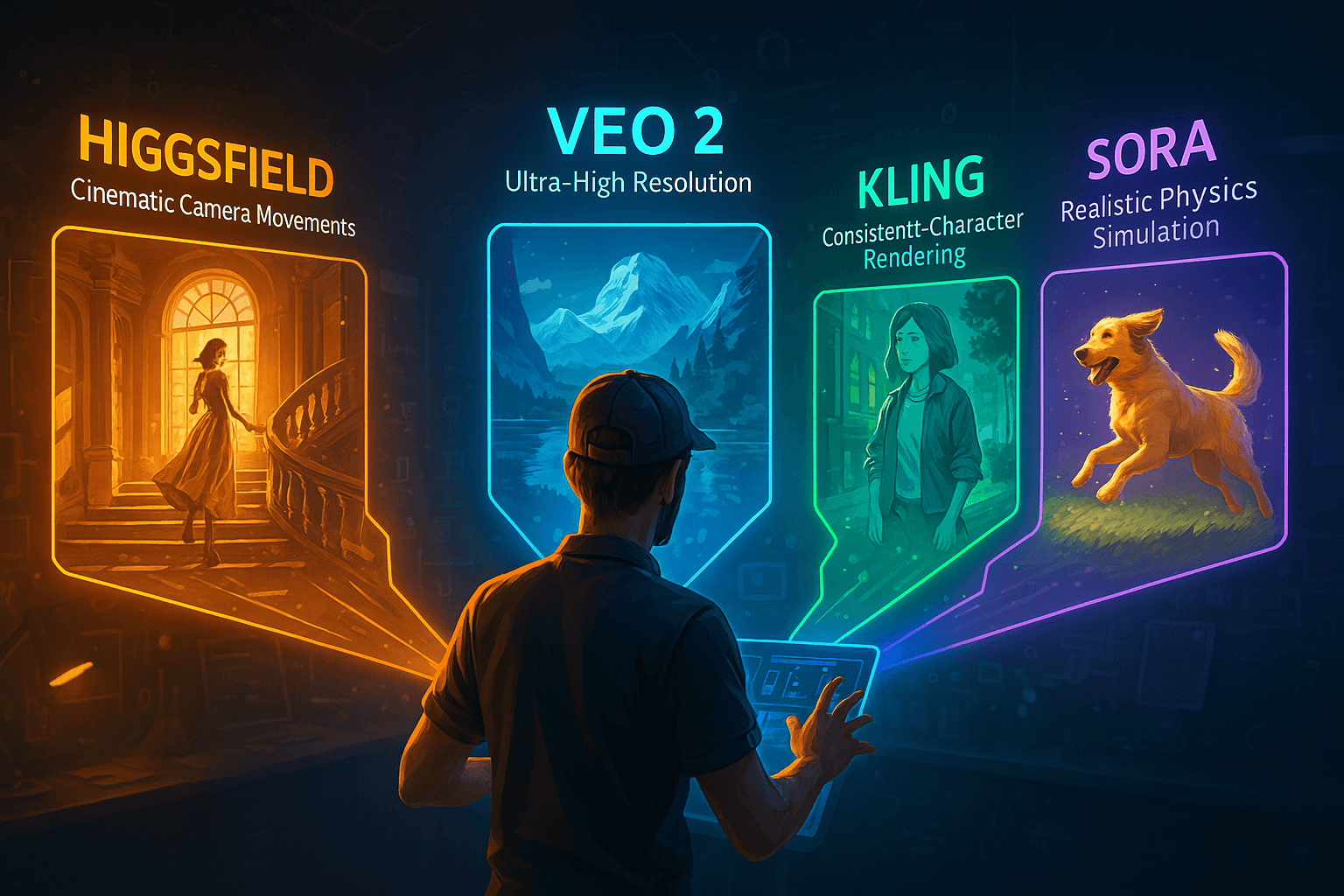

Few developments in digital media have captured the imagination quite like AI video generation. What once seemed like science fiction, the ability to create realistic, dynamic videos from simple text prompts, has become a practical reality. As of 2025, four AI video models stand at the forefront of this field: Higgsfield, Veo 2, Kling, and Sora. These systems are not merely technological curiosities; they are tools that are reshaping how we create, consume, and conceptualize visual content.

Each of these models represents a unique approach to the challenge of generating high-quality video content from text or image inputs. From Higgsfield's cinematic precision to Veo 2's technical prowess, from Kling's character consistency to Sora's world simulation capabilities, these models offer a glimpse into a future where video creation is limited only by imagination, not technical skill or resources.

This article explores the capabilities, strengths, and limitations of these groundbreaking AI video models, comparing their features and examining their potential impact on industries ranging from entertainment and marketing to education and personal content creation. As these technologies continue to advance at breathtaking speed, understanding their distinct characteristics becomes essential for creators, businesses, and technology enthusiasts alike.

Launched in early 2025, Higgsfield has quickly built a following among filmmakers. Developed by a team led by Alex Mashrabov, former AI lead at Snap, Higgsfield distinguishes itself through unusually fine-grained cinematic control and precise camera movement.

At the core of Higgsfield's appeal is its NOVA-1 model, which delivers exceptional video quality with a particular focus on natural human movement and realistic physics. Unlike many competitors that struggle with human motion, Higgsfield excels at generating lifelike characters that move naturally through environments, avoiding the uncanny valley effect that plagues many AI-generated videos.

The platform's standout feature is its camera control system, which offers more than 50 selectable camera movements, including dolly shots, whip pans, fisheye effects, arc movements, crane shots, and the Snorricam technique (a camera rigged to the actor's body, so the subject stays fixed in frame while the background moves). This gives creators camera work that would otherwise require dollies, cranes, specialized rigs, and an experienced crew.

Higgsfield also offers what the company calls "world models" for consumers, aimed at high-end video generation and editing with consistent characters and scenes. According to the company, its proprietary technology reduces common AI video artifacts such as glitching textures and "ghost" elements that briefly appear and disappear.

Google DeepMind's Veo 2, released in December 2024, represents the tech giant's most sophisticated entry into the AI video generation space. Building on Google's extensive experience with machine learning and visual AI, Veo 2 combines technical excellence with accessibility.

Veo 2 stands out for its technical specifications. Google describes the model as capable of generating video at resolutions up to 4K (3840x2160, nine times as many pixels per frame as 720p at 1280x720) with realistic motion and physics. In its standard configuration, however, Veo 2 currently outputs 720p at 24 frames per second, with higher resolutions reserved for specific use cases and premium users.

The system introduces several notable editing features, including inpainting (removing unwanted background elements, logos, or distractions and filling in the gap), outpainting (extending the frame beyond its original boundaries), and interpolation (generating smooth transitions between still images). These capabilities make Veo 2 particularly valuable for video editing and enhancement, not just creation from scratch.
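To make "interpolation" concrete, here is the simplest non-AI baseline: a linear cross-fade between two still frames, sketched in Python. This is not how Veo 2 works. A cross-fade produces a dissolve in which both images are faintly visible at once, whereas a generative model has to invent plausible motion for the in-between frames. The function name `crossfade` and the grayscale list-of-lists frame format are illustrative assumptions.

```python
# Naive baseline for "interpolation": linearly blend two still frames.
# Generative models such as Veo 2 synthesize new content (motion, occlusion,
# lighting) for in-between frames; this only shows what "in-between" means.

Frame = list[list[float]]  # hypothetical format: grayscale rows, values in [0, 1]


def crossfade(start: Frame, end: Frame, num_inbetween: int) -> list[Frame]:
    """Return the start frame, num_inbetween blended frames, then the end frame."""
    if len(start) != len(end) or any(len(a) != len(b) for a, b in zip(start, end)):
        raise ValueError("frames must have the same dimensions")
    frames = []
    steps = num_inbetween + 1
    for i in range(steps + 1):
        t = i / steps  # 0.0 at the start frame, 1.0 at the end frame
        frames.append([
            [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(start, end)
        ])
    return frames


if __name__ == "__main__":
    black = [[0.0, 0.0], [0.0, 0.0]]
    white = [[1.0, 1.0], [1.0, 1.0]]
    for frame in crossfade(black, white, num_inbetween=3):
        print(frame[0][0])  # prints 0.0, 0.25, 0.5, 0.75, 1.0
```

Every pixel simply moves toward its final value, so nothing in the scene actually moves. That gap is what generative interpolation is meant to fill.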

Google has focused on making Veo 2 accessible through its AI Studio platform, allowing users with varying levels of technical expertise to generate high-quality videos. Google reports that, in head-to-head comparisons by human raters, Veo 2 outperformed competing models on prompt adherence, translating text descriptions into visual content with high fidelity.

Developed by Kuaishou Technology and launched in its current form (version 1.6) in January 2025, Kling has carved out a distinctive niche in the AI video generation landscape by focusing on character consistency and extended video length.

Kling's technical capabilities include Full HD 1080p video generation with a frame rate of 30fps, allowing for smooth, high-quality output. The platform supports videos up to 2 minutes in length—significantly longer than many competitors—making it suitable for more complex narratives and extended scenes.
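The figures in this paragraph add up quickly. A short Python calculation shows the scale of Kling's longest clip; it is raw pixel arithmetic assuming uncompressed 1920x1080 frames, not a description of how Kling represents video internally, and `clip_stats` is a hypothetical helper.

```python
# Back-of-the-envelope size of a clip at Kling's stated maximums:
# 1080p (1920x1080), 30 fps, up to 2 minutes. Uncompressed pixels only.

def clip_stats(width: int, height: int, fps: int, seconds: int) -> tuple[int, int]:
    """Return (frame count, total pixel count) for an uncompressed clip."""
    frames = fps * seconds
    return frames, frames * width * height


frames, pixels = clip_stats(width=1920, height=1080, fps=30, seconds=2 * 60)
print(f"{frames} frames, {pixels:,} pixels")  # 3600 frames, 7,464,960,000 pixels
```

For comparison, a 5-second clip at the same settings is only 150 frames, so a 2-minute clip asks the model to keep 24 times as many frames coherent.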

The model's standout feature is Multi-Image Reference, unveiled in January 2025. Users upload multiple images of the same subject (a person, animal, character, or object), and the model uses them to keep that subject's appearance consistent across the generated video. This addresses one of the most persistent challenges in AI video generation: keeping characters recognizable from the first frame to the last.

Kling also offers six types of camera movements (horizontal, vertical, zoom, pan, tilt, and roll) and a unique Motion Brush tool that allows users to design specific motion paths for elements within the video. This gives creators precise control over how objects and characters move through the frame, though not with the cinematic sophistication of Higgsfield.
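Kling has not published how Motion Brush works internally. As a hedged illustration of what "designing a motion path" involves, the Python sketch below takes a hypothetical brush stroke (a polyline of x, y points) and places one target position per frame, spaced evenly by distance along the stroke. The function `resample_path` and its inputs are assumptions made for this example.

```python
import math

Point = tuple[float, float]


def resample_path(path: list[Point], num_frames: int) -> list[Point]:
    """Place num_frames points evenly (by distance) along a drawn polyline.

    Illustrative only: a hypothetical way to turn a brush stroke into one
    target position per frame, not Kling's actual Motion Brush algorithm.
    """
    if len(path) < 2 or num_frames < 2:
        raise ValueError("need at least two path points and two frames")
    # Cumulative distance along the stroke at each vertex.
    cumulative = [0.0]
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        cumulative.append(cumulative[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cumulative[-1]
    if total == 0:
        return [path[0]] * num_frames  # degenerate stroke: object stays put

    positions = []
    segment = 0
    for frame in range(num_frames):
        target = total * frame / (num_frames - 1)  # distance travelled by this frame
        # Advance to the segment that contains the target distance.
        while segment < len(path) - 2 and cumulative[segment + 1] < target:
            segment += 1
        seg_len = cumulative[segment + 1] - cumulative[segment]
        t = 0.0 if seg_len == 0 else (target - cumulative[segment]) / seg_len
        (x0, y0), (x1, y1) = path[segment], path[segment + 1]
        positions.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return positions


if __name__ == "__main__":
    # An L-shaped stroke: 100 px right, then 100 px down.
    stroke = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
    for pos in resample_path(stroke, num_frames=5):
        print(pos)
```

Spacing points by distance rather than by stroke vertex keeps the object's speed constant along the path; applying an easing curve to the per-frame fraction would make it accelerate or decelerate instead.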

Released to the public in December 2024 after months of anticipation, Sora represents OpenAI's ambitious entry into the video generation space. Building on the company's success with DALL-E and ChatGPT, Sora approaches video generation as a world simulation problem rather than simply a visual rendering task.

Technically, Sora generates videos at 1080p resolution with options for widescreen (1920x1080), vertical (1080x1920), and square formats. The platform currently supports videos up to 20 seconds in length, with plans to extend this duration in future updates. The model aims to keep lighting, physics, and character appearance consistent throughout a clip, which remains one of the harder problems in AI video generation.

What distinguishes Sora is OpenAI's framing of video generation as world simulation. Rather than treating video as a sequence of independent images, Sora is trained to capture how objects interact, how materials behave, and how scenes evolve over time. The goal is video with consistent physics and natural movement, even in fantastical or impossible scenarios, although OpenAI acknowledges that the model can still struggle to simulate the physics of complex scenes accurately.
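OpenAI's technical report on Sora ("Video generation models as world simulators") describes compressing video into a lower-dimensional latent space and splitting it into spacetime patches, which a diffusion transformer then processes as tokens. The Python sketch below shows only the patching step, applied to raw grayscale pixels instead of a learned latent, with arbitrary patch sizes; `spacetime_patches` is a hypothetical name, not part of any OpenAI API.

```python
# Split a video into non-overlapping spacetime patches. In Sora, patches are
# taken from a compressed latent representation; here we use raw pixels.

Video = list[list[list[float]]]  # [frames][rows][cols], grayscale


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> list[list[float]]:
    """Return flattened pt x ph x pw patches in (time, row, col) order.

    The video's dimensions must be divisible by the patch sizes.
    """
    t_len, h_len, w_len = len(video), len(video[0]), len(video[0][0])
    if t_len % pt or h_len % ph or w_len % pw:
        raise ValueError("video dimensions must be divisible by patch sizes")
    patches = []
    for t0 in range(0, t_len, pt):          # step through time
        for y0 in range(0, h_len, ph):      # then down the frame
            for x0 in range(0, w_len, pw):  # then across the frame
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches


if __name__ == "__main__":
    # 4 frames of 4x4 pixels; each pixel value encodes its (t, y, x) position.
    video = [[[100 * t + 10 * y + x for x in range(4)] for y in range(4)] for t in range(4)]
    patches = spacetime_patches(video, pt=2, ph=2, pw=2)
    print(len(patches))  # (4/2) * (4/2) * (4/2) = 8 patches
    print(patches[0])    # [0, 1, 10, 11, 100, 101, 110, 111]: frames 0-1, rows 0-1, cols 0-1
```

Because each patch spans several frames as well as a block of pixels, a single token carries information about how that region changes over time, which is the intuition behind treating video as more than a stack of independent images.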

The platform includes several specialized features, including Remix (modifying existing videos), Re-cut (changing scene composition), Loop (creating seamlessly repeating clips), Storyboard (generating multiple scenes from a narrative), Blend (combining visual elements), and Style Preset (applying consistent visual aesthetics).

When comparing these four leading AI video models, several key factors emerge that differentiate their capabilities and ideal use cases.

In terms of video quality and realism, all four models produce impressive results, but with distinct characteristics. Veo 2 currently leads in pure technical quality, with its 4K resolution capability and exceptional physics simulation. Sora follows closely, with its world-simulation approach producing remarkably coherent scenes. Higgsfield excels specifically at human movement and cinematic aesthetics, while Kling offers excellent quality with particular strength in maintaining character consistency.

For ease of use and accessibility, Veo 2 takes the lead through Google's AI Studio integration, making powerful video generation available to users with minimal technical expertise. Kling offers a relatively approachable interface despite its Motion Brush's learning curve. Sora and Higgsfield target more professional users, with interfaces that offer greater control but require more technical understanding.

Each model also has specialized capabilities that make it better suited to some projects than others. Higgsfield is unmatched for cinematic camera work and professional-looking movement. Kling excels at character consistency and longer-form content. Sora creates the most coherent narrative scenes with its world understanding. Veo 2 offers the most comprehensive editing features with its inpainting, outpainting, and interpolation tools.

The rapid advancement of AI video models like Higgsfield, Veo 2, Kling, and Sora points to an extraordinarily promising future for digital content creation. Based on current development trajectories, we can anticipate several exciting developments in the coming years.

By late 2025, if current trends hold, these models may reach near-photorealistic quality for most standard scenes, with video durations extending to 3-5 minutes without quality degradation. User interfaces are likely to become more intuitive, allowing creators with no technical background to produce professional-quality content through natural-language instructions and simple controls.

Moving into 2026-2027, AI video models will likely incorporate real-time generation capabilities, enabling interactive applications and live content creation. We'll see deeper integration with existing creative software suites, allowing seamless workflows between AI generation and traditional editing tools. Character and scene consistency will improve dramatically, enabling coherent longer-form content like short films and educational videos.

The 2028-2030 period may bring truly transformative capabilities, including full-length feature film generation with consistent characters, settings, and narrative coherence. We'll likely see specialized AI video models for different industries—medical visualization, architectural rendering, educational content—each optimized for specific use cases and visual styles.

These advancements will democratize video production across numerous industries. In entertainment, independent creators will produce cinema-quality content without massive budgets. Education will benefit from customized visual learning materials generated on demand. Marketing teams will create personalized video content tailored to individual consumers. Healthcare professionals will use AI-generated videos for patient education and medical training.

As we've explored throughout this article, the latest generation of AI video models—Higgsfield, Veo 2, Kling, and Sora—represent a remarkable leap forward in what's possible with artificial intelligence and content creation. Each model brings unique strengths to the table: Higgsfield's cinematic precision, Veo 2's technical excellence, Kling's character consistency, and Sora's world simulation capabilities.

For content creators, the choice between these platforms depends largely on specific needs. Filmmakers and cinematographers will likely gravitate toward Higgsfield's unparalleled camera controls. Marketing teams seeking efficiency and reliability might prefer Veo 2's accessibility and technical quality. Character-driven storytellers will find value in Kling's consistency features. Conceptual artists and those pushing creative boundaries may be drawn to Sora's world understanding and narrative coherence.

What's particularly exciting is not just how good these models are today, but how rapidly they're improving. The pace of advancement suggests we're only beginning to scratch the surface of what's possible. Within a few years, these technologies will likely transform from impressive tools to essential components of the creative process across industries.

Perhaps most importantly, these AI video models are democratizing high-quality video production. Tasks that once required expensive equipment, technical expertise, and large teams are becoming accessible to individual creators, small businesses, and educational institutions. This democratization promises to diversify the voices and perspectives represented in visual media.
